ChatGPT Adult Mode Arrives 2026: OpenAI’s Plan for Mature Content

Image Credit: We-Vibe | Splash

OpenAI has signalled it expects to introduce an “adult mode” in ChatGPT in the first quarter of 2026, with the timing tied to how well its age prediction system can separate adults from users under 18.

In parallel, OpenAI chief executive Sam Altman has said the company will begin allowing “mature content” for users who verify their age starting in December 2025, including “erotica for verified adults,” as part of what he described as a “treat adult users like adults” principle.

What OpenAI Has Confirmed

The clearest confirmed points are about timing and gating, not the exact content scope. Fidji Simo, OpenAI’s CEO of Applications, told reporters that adult mode is expected in the first quarter of 2026 and that OpenAI wants to get better at age prediction before switching on adult features.

Altman’s statement about the December 2025 change, reported by Reuters, frames the near-term shift as a relaxation for verified adults rather than a blanket policy change for everyone.

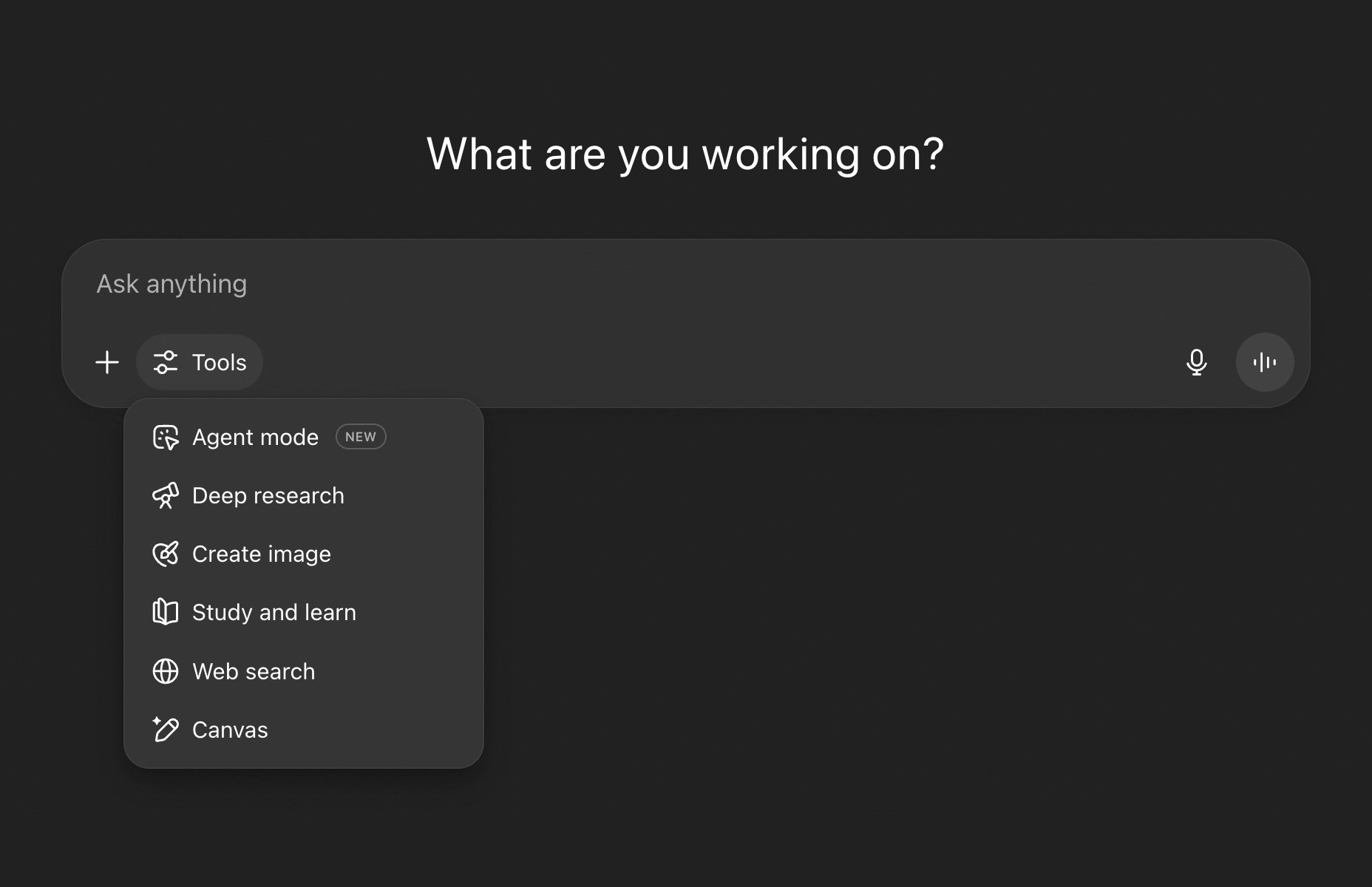

What OpenAI has not yet published is a product spec that defines adult mode in detail (for example, whether it is a single toggle, a separate policy tier, or a set of broader conversation allowances beyond sexual content). Reporting and commentary may speculate, but the sources above do not provide a definitive feature breakdown.

Age Prediction Plus Verification

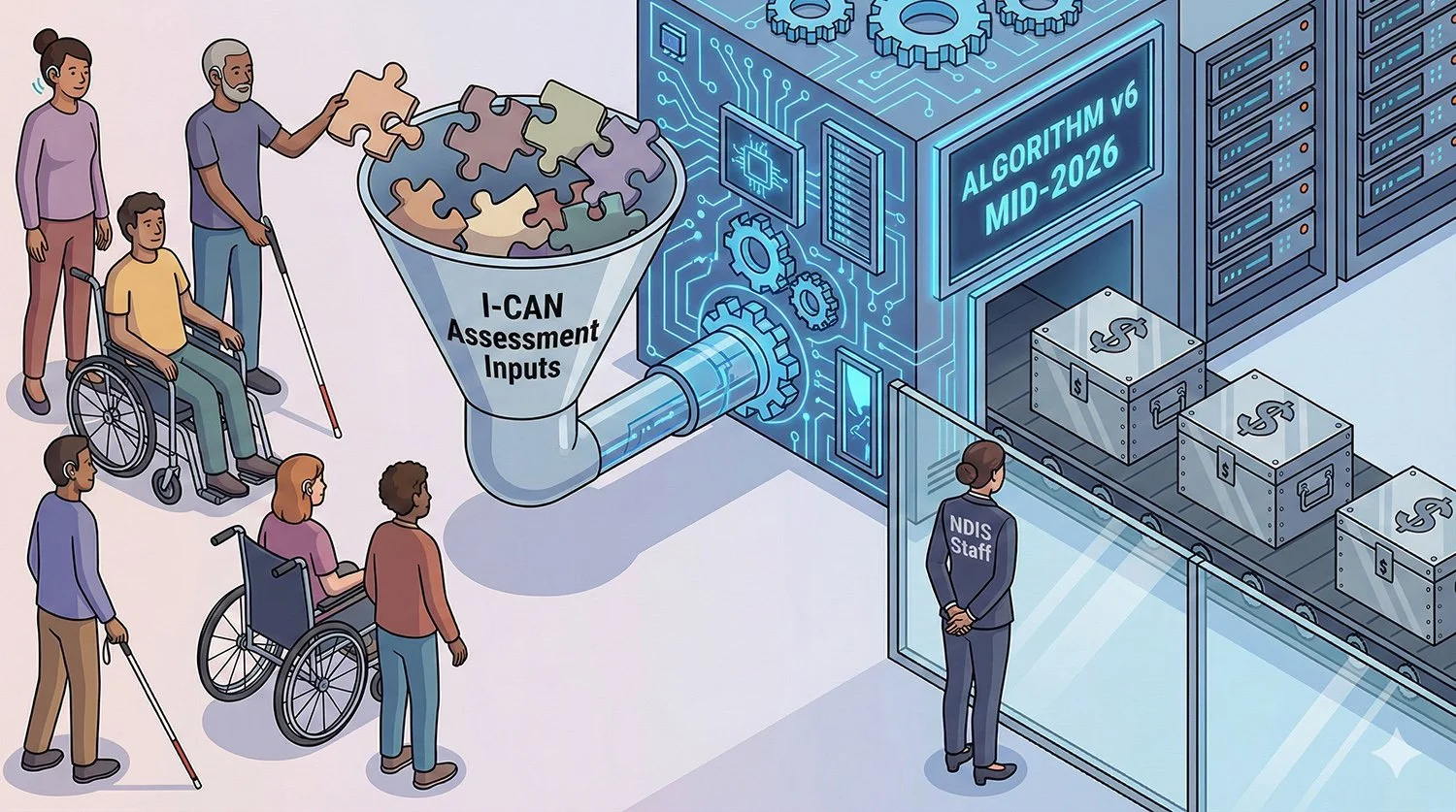

OpenAI’s plan is built around an AI-driven age prediction layer, supplemented by age verification flows when needed.

OpenAI says it is “building towards” a long-term system that estimates whether someone is over or under 18 so the ChatGPT experience can be tailored accordingly, including blocking graphic sexual content for users under 18.

In its “Teen safety, freedom, and privacy” post, OpenAI adds that if there is doubt, it will default to the under-18 experience, and that in some cases or countries it may also ask for ID, acknowledging the privacy tradeoff for adults.

On the operational side, OpenAI’s Help Centre describes two distinct pathways that can lead to age checks:

Mandatory age verification in some locations (legal requirement): OpenAI says some users will see a login banner prompting age verification and will have 60 days to complete it; after that, access is blocked until verification is done. It says it relies on the third-party service Yoti and that, depending on the method, users may be asked for a selfie, a government ID upload, or to use the Yoti app. Users aged 13 to 17 need a parent or guardian to complete the check on their behalf.

Age prediction places an account into a protected experience: If OpenAI’s age prediction estimates that a user is under 18, OpenAI says the user can keep using ChatGPT in a protected experience and does not have to verify their age unless they want access to the standard adult experience. OpenAI also describes using Persona for verification in this flow and notes that it uses behavioural and account-level signals (such as when a user tends to be active and how long the account has existed) to estimate age.
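The two pathways above can be sketched in code to make the gating logic concrete. This is a minimal illustration only, not OpenAI's implementation: the `Account` fields, the `ADULT_CONFIDENCE_THRESHOLD` value, and both function names are hypothetical, and the only figures taken from the reporting are the 60-day window and the rule that uncertain cases default to the under-18 experience.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from enum import Enum
from typing import Optional


class Experience(Enum):
    PROTECTED = "protected"  # the under-18 experience
    STANDARD = "standard"    # the standard adult experience


# Hypothetical cut-off: how confident a predictor must be that a user is an adult.
ADULT_CONFIDENCE_THRESHOLD = 0.9

# The Help Centre describes a 60-day window where verification is legally required.
VERIFICATION_WINDOW_DAYS = 60


@dataclass
class Account:
    predicted_adult_probability: float        # hypothetical model output in [0, 1]
    verified_adult: Optional[bool] = None     # None means no verification completed
    mandatory_verification_region: bool = False
    banner_first_shown: Optional[date] = None


def select_experience(account: Account) -> Experience:
    """Pick an experience, defaulting to PROTECTED whenever there is doubt."""
    if account.verified_adult is not None:
        # A completed verification overrides the prediction either way.
        return Experience.STANDARD if account.verified_adult else Experience.PROTECTED
    if account.predicted_adult_probability >= ADULT_CONFIDENCE_THRESHOLD:
        return Experience.STANDARD
    return Experience.PROTECTED


def is_access_blocked(account: Account, today: date) -> bool:
    """Return True once the verification window has lapsed without verification."""
    if not account.mandatory_verification_region:
        return False
    if account.verified_adult is not None or account.banner_first_shown is None:
        return False
    # Assumption: the window is counted in calendar days from the first banner.
    deadline = account.banner_first_shown + timedelta(days=VERIFICATION_WINDOW_DAYS)
    return today > deadline
```

Under these assumptions, an unverified account with a borderline prediction lands in the protected experience, which is the behaviour behind the verification friction that misclassified adults may encounter.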

Why OpenAI is Loosening Restrictions Now

Altman’s remarks, as reported by Reuters, position this as a reversal of a period in which ChatGPT was made “pretty restrictive” to be careful with people in mental distress. He said OpenAI had new tools to mitigate those risks and would relax restrictions “in most cases,” while expanding what verified adults can do.

From a product and safety engineering perspective, OpenAI’s latest system documentation also points to an increased focus on evaluation in sensitive areas. In the GPT-5.2 system card update, OpenAI reports improved performance on offline evaluations covering suicide and self-harm, mental health, and emotional reliance compared with GPT-5.1.

GPT-5.2 Signals How Policy and Model Behaviour May Shift

OpenAI’s GPT-5.2 system card includes a direct statement that its internal testing found GPT-5.2 Instant “generally refuses fewer requests for mature content”, specifically sexualised text output, while saying this does not affect other types of disallowed sexual content or content involving minors.

The same section says OpenAI applies additional protections for users it knows to be minors, reducing access to sensitive content categories including violence, gore, sexual content, romantic or violent role play, and extreme beauty standards. It adds that OpenAI is in the early stages of rolling out its age prediction model so that it can apply these protections automatically to accounts believed to belong to under-18 users.

Put simply, OpenAI is describing a two-part move: fewer blanket refusals for adults in some “mature” categories, alongside stronger AI-based routing and extra guardrails for minors.

How This Compares with Other AI Platforms

Competitors vary widely on adult content allowances and how they frame safety boundaries.

Google: Google’s Generative AI Prohibited Use Policy lists “sexually explicit content” (for example content created for the purpose of pornography or sexual gratification) under prohibited activities.

Anthropic: Anthropic has stated its usage policy prohibits sexually explicit content and says it has safeguards intended to prevent sexual interactions.

Character.AI: Character.AI has moved in the other direction for minors, with The Verge reporting that it would phase in a ban on open-ended chats for under-18 users and use an in-house age assurance model, while directing adults mistakenly flagged as minors to Persona for verification.

This context matters because OpenAI’s approach appears aimed at expanding adult capability without moving to a fully permissive default, using automated age estimation as the gatekeeper rather than a simple “click yes” prompt.

What IT Teams and Users Should Watch

A few practical points are likely to drive real-world impact more than the adult mode label itself:

False positives and friction: OpenAI’s own materials make clear it will “play it safe” and default uncertain users to the under-18 experience, which can create verification friction for adults who are misclassified.

Privacy tradeoffs: OpenAI explicitly frames ID checks as a privacy compromise in some cases, while also describing options such as selfie-based estimation in some locales and guidance that some ID numbers can be redacted during verification.

Regional differences: The Yoti-based banner flow is described as location dependent to meet legal requirements, so rollout and user experience may differ by country, and even by state or territory, depending on how rules are applied.
