OAuth Security Guidance Gets Sharper as AI Assistants Plug into More Services

Image Credit: Jacky Lee

The protocol behind many “connect your account” buttons is getting renewed scrutiny as AI products increasingly move from chat to action. OAuth 2.0, the IETF authorisation framework first standardised in 2012, is designed to let an application obtain limited access to an HTTP service without asking the user to share a password.

In January 2025, the IETF published RFC 9700, a Best Current Practice document that updates OAuth 2.0 security advice based on years of real-world deployment and newer threats, and explicitly deprecates some less secure modes of operation. In parallel, work continues on OAuth 2.1, an Internet Draft still in progress (latest version dated 19 October 2025) that aims to replace and obsolete RFC 6749 (OAuth 2.0) and RFC 6750 (Bearer Token Usage).

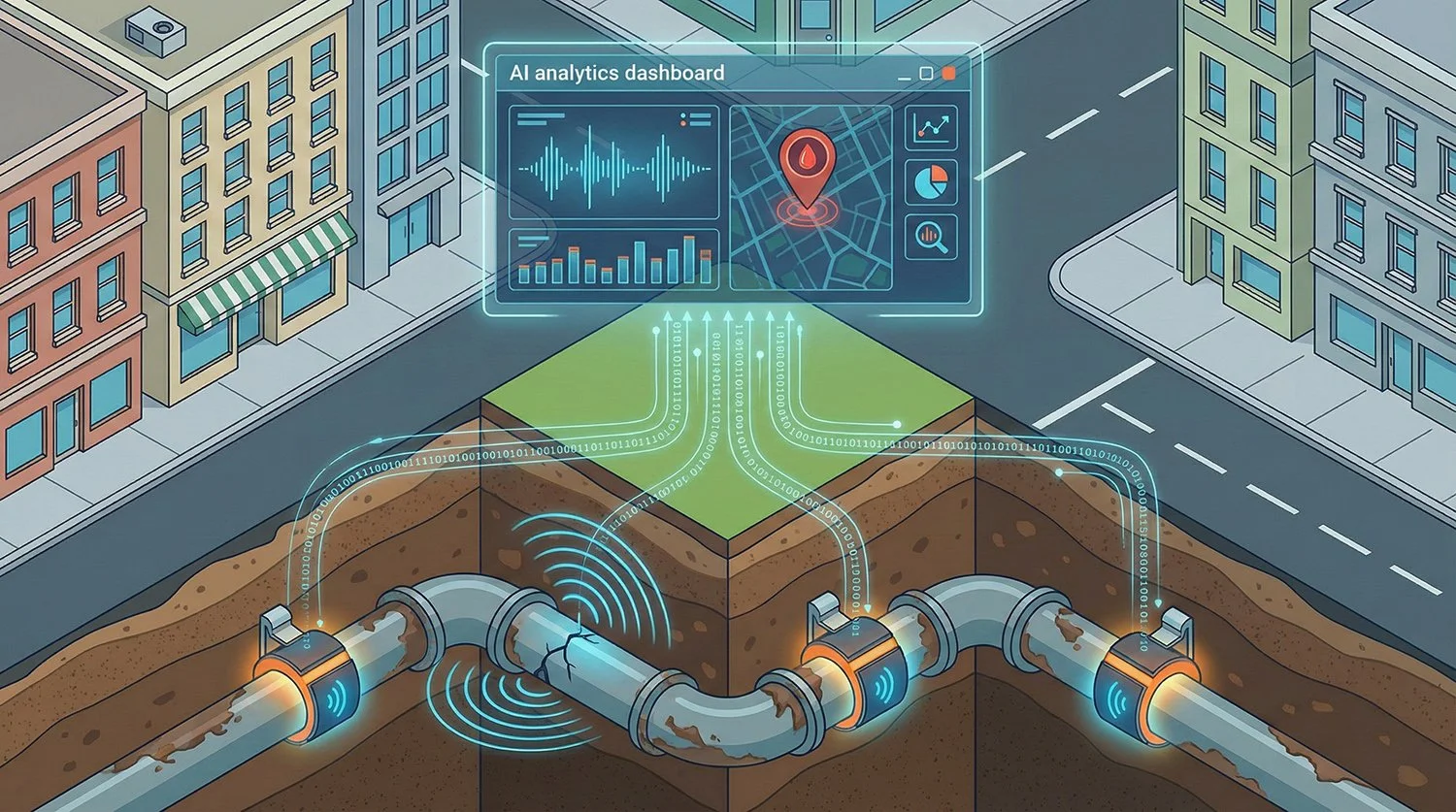

For AI tools, the significance is practical: once an assistant can read files, send emails, create calendar events, or trigger workflows, the access token becomes a high value asset. OWASP’s guidance for large language model applications highlights risks like prompt injection and insecure output handling, both of which can become pathways to unintended actions or data exposure when an LLM is connected to downstream systems.

What OAuth Is

OAuth 2.0 is an authorisation framework that enables a third party application to obtain limited access to a service, either on behalf of a resource owner through an approval interaction, or on its own behalf in certain machine to machine scenarios.

A key point for readers is what OAuth is not. OAuth does not define authentication by itself. OpenID Connect is a separate specification that describes itself as an identity layer on top of OAuth 2.0, enabling clients to verify the identity of an end user and obtain basic profile information in an interoperable way.

How OAuth Typically Works

In a common setup, a user is redirected to an authorisation service, reviews what an app is requesting, and approves access. The app then receives tokens that can be presented to a resource server to access protected APIs. OAuth uses “scope” values to express what level of access has been granted.
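As a rough illustration of the redirect step, the sketch below builds an authorisation request URL for the authorisation code flow. The endpoint, client identifier, redirect URI, and scope names are hypothetical placeholders, not values from any real provider.

```python
import secrets
from urllib.parse import urlencode, urlsplit, parse_qs

def build_authorization_url(authorize_endpoint, client_id, redirect_uri, scopes, state=None):
    """Return (url, state) for an authorisation code request."""
    # A random state value lets the app match the callback to this request.
    state = state or secrets.token_urlsafe(16)
    params = {
        "response_type": "code",
        "client_id": client_id,
        "redirect_uri": redirect_uri,
        "scope": " ".join(scopes),  # scope is a space-delimited list of values
        "state": state,
    }
    return f"{authorize_endpoint}?{urlencode(params)}", state

# Hypothetical values for illustration only.
url, state = build_authorization_url(
    "https://auth.example.com/authorize",
    "assistant-app",
    "https://app.example.com/callback",
    ["calendar.read", "files.read"],
)
```

Requesting only the scopes the feature needs keeps the consent screen narrow and limits what a leaked token could do.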

Bearer tokens are a central building block in many deployments. RFC 6750 describes how bearer tokens are used in HTTP requests and warns that any party in possession of a bearer token can use it to access the associated resources, so tokens must be protected from disclosure in storage and transport.
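A minimal sketch of what that looks like in practice, assuming a hypothetical API URL and token value: the token travels in the `Authorization` header, only over HTTPS, and is shortened before it goes anywhere near a log line.

```python
import urllib.request

def bearer_request(url, access_token):
    """Build (but do not send) a request carrying a bearer token."""
    if not url.lower().startswith("https://"):
        raise ValueError("bearer tokens should only be sent over TLS")
    req = urllib.request.Request(url)
    req.add_header("Authorization", f"Bearer {access_token}")
    return req

def redact(token, keep=4):
    """Shorten a token for log output so the full value is never written."""
    return token[:keep] + "..." if len(token) > keep else "..."

# Hypothetical endpoint and token, for illustration only.
req = bearer_request("https://api.example.com/v1/files", "example-token-value")
log_line = f"calling files API with token {redact('example-token-value')}"
```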

OAuth also supports multiple flows for different environments. One example that has become common on consumer devices is the OAuth 2.0 Device Authorization Grant, designed for internet connected devices that lack a browser or have limited input, such as smart TVs and similar form factors.
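In the device flow (RFC 8628), the device shows the user a code, then polls the token endpoint until the user approves on another device. The sketch below covers only that polling loop. The `post_token` callable is a hypothetical stand-in for whatever HTTP client the device uses, and the default timings are assumptions.

```python
import time

DEVICE_GRANT = "urn:ietf:params:oauth:grant-type:device_code"

def poll_for_token(post_token, device_code, client_id,
                   interval=5, expires_in=600, sleep=time.sleep):
    """Poll until the user approves, the request is denied, or the code expires.

    post_token is a hypothetical callable that sends the form to the token
    endpoint and returns the decoded JSON response as a dict.
    """
    waited = 0
    while waited < expires_in:
        sleep(interval)
        waited += interval
        response = post_token({
            "grant_type": DEVICE_GRANT,
            "device_code": device_code,
            "client_id": client_id,
        })
        error = response.get("error")
        if error is None:
            return response              # contains the access token
        if error == "authorization_pending":
            continue                     # user has not finished yet
        if error == "slow_down":
            interval += 5                # back off as the server requests
            continue
        raise RuntimeError(f"device authorisation failed: {error}")
    raise TimeoutError("device code expired before the user approved")
```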

Revocation and Lifecycle Control

OAuth’s day-to-day safety hinges on token lifecycle management, not just the initial consent screen. RFC 7009 defines a token revocation endpoint that allows clients to notify the authorisation server that a previously obtained access token or refresh token is no longer needed. The server can then clean up the associated credentials and invalidate the token and, where applicable, other tokens based on the same authorisation grant.

This matters for AI connected apps because users often trial multiple tools, connect accounts briefly, and expect disconnect to actually cut access. Token revocation offers a standard mechanism for that outcome when implemented by the provider.
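A "disconnect" button could translate into a revocation call along these lines. This is a sketch that only builds the request. The endpoint URL and client credentials are hypothetical, and HTTP Basic client authentication is an assumption about how the provider authenticates the client.

```python
import base64
import urllib.request
from urllib.parse import urlencode

def build_revocation_request(revocation_endpoint, token, client_id, client_secret,
                             token_type_hint="refresh_token"):
    """Build (but do not send) an RFC 7009 revocation request."""
    body = urlencode({
        "token": token,
        "token_type_hint": token_type_hint,  # optional hint for the server
    }).encode("ascii")
    # Assumption: the client authenticates with HTTP Basic credentials.
    credentials = base64.b64encode(f"{client_id}:{client_secret}".encode()).decode()
    return urllib.request.Request(
        revocation_endpoint,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Basic {credentials}",
            "Content-Type": "application/x-www-form-urlencoded",
        },
    )

# Hypothetical values for illustration only.
req = build_revocation_request(
    "https://auth.example.com/revoke", "abc123", "assistant-app", "s3cret")
```

Revoking the refresh token rather than only the access token is the more thorough choice where the provider supports it, since a surviving refresh token could otherwise be used to mint new access tokens.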

Why AI Puts OAuth Back in the Spotlight

AI assistants increasingly act as orchestration layers over many systems. That increases two security pressures at once:

More permissions in play: assistants may request broad scopes to be useful, especially across email, files, tickets, and messaging.

More chances for indirect control: OWASP describes prompt injection as a way for crafted inputs to manipulate model behaviour, and insecure output handling as insufficient validation and handling of model generated outputs before they are passed to other components.

In an OAuth context, the practical risk is not that OAuth is “broken”, but that an LLM connected to tools can be tricked into using valid tokens in unsafe ways, or into emitting data that later exposes tokens or sensitive outputs in logs, links, or downstream systems. The takeaway for AI product teams is that OAuth implementation details and tool permission design become part of the AI safety surface, not just identity plumbing.
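One way to treat tool permissions as part of that surface is to check every model-proposed action against the scopes the user actually granted, and to require explicit confirmation for high-risk actions. The sketch below is illustrative only: the `ToolCall` type, tool names, and scope names are all hypothetical.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class ToolCall:
    """A model-proposed action and the scope it would need (hypothetical type)."""
    name: str
    required_scope: str
    high_risk: bool = False

def authorise_tool_call(call, granted_scopes, user_confirmed=False):
    """Return True only if the call fits the granted scopes and risk policy."""
    if call.required_scope not in granted_scopes:
        return False   # the token was never granted this permission
    if call.high_risk and not user_confirmed:
        return False   # valid scope, but a human has not approved the action
    return True

# Hypothetical tools for illustration only.
read_events = ToolCall("list_events", "calendar.read")
send_email = ToolCall("send_email", "mail.send", high_risk=True)
```

The check runs outside the model, so a prompt injection that persuades the model to propose `send_email` still fails unless the scope was granted and the user confirmed.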

The Security Direction since 2025

RFC 9700 positions itself as a Best Current Practice, explicitly updating and extending the threat model and security advice given in earlier OAuth documents and deprecating some less secure modes of operation. It signals where OAuth deployments are expected to land: fewer legacy shortcuts and more defence in depth against token theft, redirect abuse, and token misuse.

Several related standards and extensions are also worth watching in AI heavy deployments:

PKCE (RFC 7636): mitigates the authorisation code interception attack for OAuth public clients using the authorisation code grant.

Pushed Authorization Requests (RFC 9126): allows clients to push the authorisation request payload directly to the authorisation server and then use a request URI reference in the front channel, reducing exposure of request parameters via the browser path.

DPoP (RFC 9449): sender constrains OAuth tokens using a proof of possession mechanism at the application level, enabling detection of replay attacks with access and refresh tokens.

Rich Authorization Requests (RFC 9396): introduces `authorization_details` to carry fine grained authorisation data, expanding beyond a simple scope string model.
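Of the extensions listed above, PKCE is the simplest to show in code. In a minimal sketch of the S256 method from RFC 7636, the client keeps a random verifier secret, sends only its SHA-256 challenge with the authorisation request, and reveals the verifier when it exchanges the code. The function names are illustrative.

```python
import base64
import hashlib
import secrets

def s256_challenge(verifier):
    """Derive the S256 code challenge: base64url(SHA-256(verifier)), no padding."""
    digest = hashlib.sha256(verifier.encode("ascii")).digest()
    return base64.urlsafe_b64encode(digest).rstrip(b"=").decode("ascii")

def make_pkce_pair():
    """Return (code_verifier, code_challenge) for one authorisation request."""
    # 32 random bytes give a 43-character base64url verifier.
    verifier = base64.urlsafe_b64encode(secrets.token_bytes(32)).rstrip(b"=").decode("ascii")
    return verifier, s256_challenge(verifier)

verifier, challenge = make_pkce_pair()
# The authorisation request carries code_challenge=<challenge> and
# code_challenge_method=S256; the token request carries code_verifier=<verifier>.
```

An attacker who intercepts the authorisation code cannot redeem it without the verifier, which never passes through the browser redirect.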

For AI assistants, these pieces map to a clear trend: reduce token replay value, reduce the amount of sensitive authorisation detail exposed in the browser redirect path, and tighten permissions so the assistant can do what it needs without inheriting broad account wide power.
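To make the "tighten permissions" point concrete, the sketch below shows what a fine grained request might look like using the `authorization_details` parameter from RFC 9396. The `type` value, location URL, and client details are hypothetical, since real values are defined by each API.

```python
import json
from urllib.parse import urlencode, parse_qs

# Hypothetical request: read-only access to one calendar API, nothing else.
authorization_details = [{
    "type": "calendar_access",                        # hypothetical type name
    "actions": ["read"],                              # what the assistant may do
    "locations": ["https://calendar.example.com/"],   # where it may do it
}]

params = {
    "response_type": "code",
    "client_id": "assistant-app",                     # hypothetical client
    "redirect_uri": "https://app.example.com/callback",
    # The parameter value is a JSON array, URL-encoded with the other parameters.
    "authorization_details": json.dumps(authorization_details),
}
query = urlencode(params)
```

Compared with a broad scope such as full calendar access, the structured form lets the provider show and enforce exactly which actions were approved.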

OAuth 2.1: Consolidation Rather Than Reinvention

OAuth 2.1 remains an Internet Draft as of 19 October 2025. The draft states it replaces and obsoletes OAuth 2.0 (RFC 6749) and bearer token usage (RFC 6750), signalling a consolidation of commonly used pieces and a push away from older patterns.

In practical terms, this is less about a brand new protocol and more about standardising the safer defaults that many security teams already expect, particularly as OAuth has become a dependency for high value services and automated tooling.

How OAuth Compares to Nearby Standards

OAuth overlaps with other identity and access standards, but the boundaries are well defined in the specs:

OpenID Connect: an identity layer on top of OAuth 2.0, used when a system needs to verify user identity and obtain profile information.

SAML 2.0: an OASIS standard that defines an XML based framework for describing and exchanging security information using assertions across domain boundaries, often in federation contexts.

For AI products, the design choice is often not “OAuth versus everything”, but which combination best matches the job: OpenID Connect for sign in and identity, OAuth for delegated API access, and additional controls for high risk actions.

What to Watch Next for AI Connected Apps

Security guidance for AI systems is increasingly framed as lifecycle risk management. NIST describes its AI Risk Management Framework as a voluntary approach to manage risks and incorporate trustworthiness considerations into the design, development, use, and evaluation of AI products and systems.

Applied to OAuth connected assistants, that points to predictable priorities: least privilege permissions, clear consent, safe action execution, strong token handling, and monitoring for abuse patterns. The direction of travel in the OAuth ecosystem, as reflected in RFC 9700 and the OAuth 2.1 draft, aligns with that risk based posture.

Source: RFC 6749 OAuth 2.0 Authorization Framework, RFC 9700 OAuth 2.0 Security Best Current Practice, Draft OAuth 2.1, RFC 7009 Token Revocation, RFC 7636 PKCE, RFC 9126 Pushed Authorization Requests, RFC 9449 DPoP, RFC 9396 Rich Authorization Requests, OWASP Top 10 for Large Language Model Applications, NIST AI Risk Management Framework 1.0 Overview, OpenID Connect Core 1.0, OASIS SAML Technical Overview
