Australia Targets AI Chatbots with New Child Safety Rules

Image Credit: Sanket Mishra | Splash

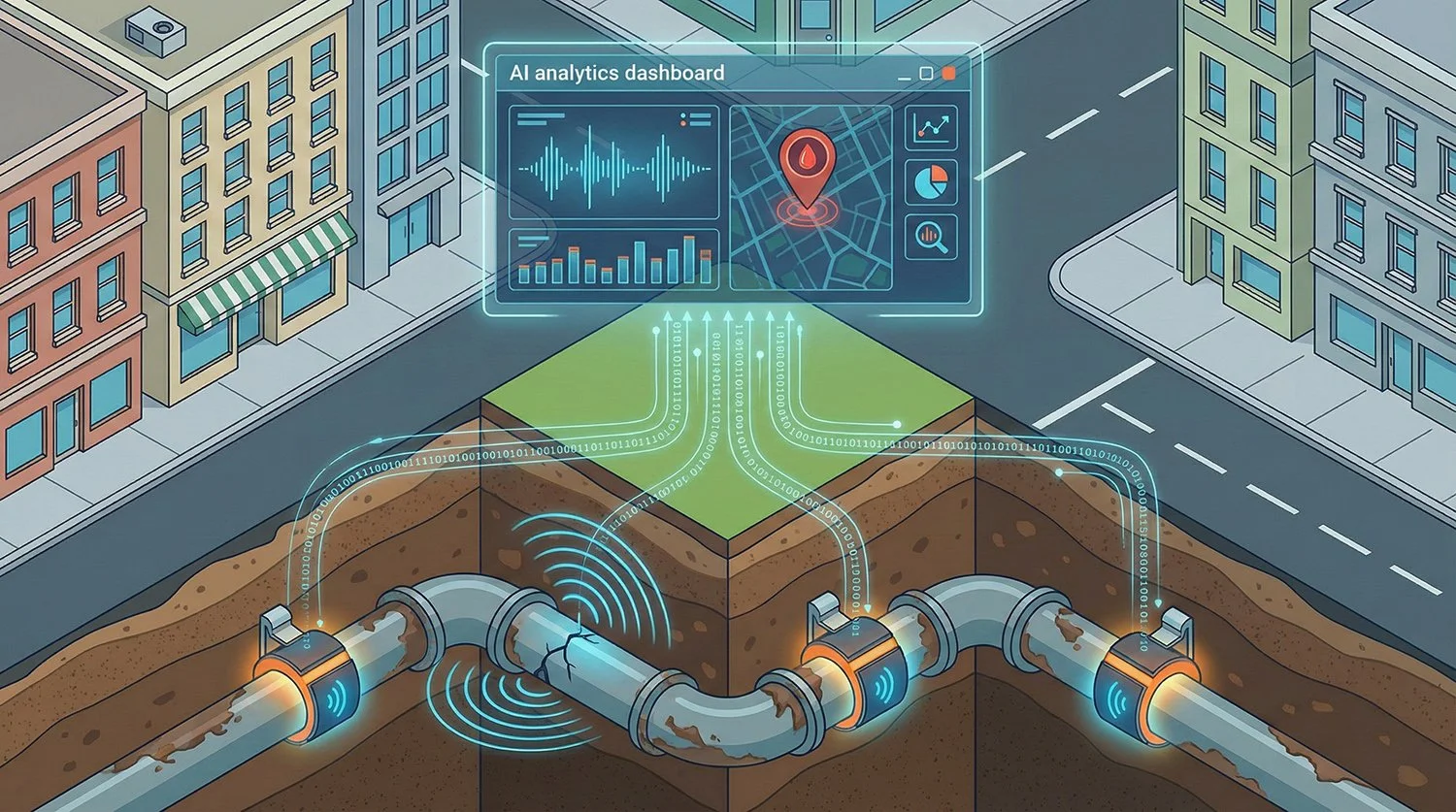

An Australian 9News report published on 30 December 2025 said AI chatbots have become a fresh focus for Australia’s online safety rules, citing eSafety Commissioner Julie Inman Grant and describing risks for children when conversational AI systems surface harmful material. The report said the intent is to stop AI bots from serving certain harmful content to minors, and framed the shift as part of a wider push to strengthen online protections for young people.

What Is Actually Changing in Australia

Australia’s online safety approach is being implemented through a set of industry codes registered under the eSafety regulatory framework, with requirements starting on different dates depending on the service category.

The register shows that one group of codes, including the search engine, hosting, and internet carriage service codes, commenced on 27 December 2025. A second group, including codes for relevant electronic services, app distribution services, generative AI services, device manufacturers, and others, commences on 9 March 2026.

That staged rollout matters for readers because it means some parts of the regime are already in force, while the obligations that more directly capture many chatbot-style products sit in the tranche commencing in March 2026.

Where AI Chatbots Fit in the Formal Code Text

In the registered code schedule for Relevant Electronic Services, the text explicitly discusses AI companion chatbot features and requires providers to assess risk in relation to restricted content categories, including self-harm material, and to record risk assessment decisions.

The same schedule also points to compliance measures that can include controls such as age assurance and access control where feasible and reasonably practicable for services whose purpose involves sharing certain high-risk material. It also sets out options for preventing the generation of restricted categories of material in higher-risk scenarios.

This is the practical link between the “AI chatbots” framing and the regulatory reality: the rules are not only about traditional platforms; they are written to address AI-enabled features where children could be exposed to, or generate, restricted material.

eSafety’s Enforcement Signal

Separately, eSafety has already used formal regulatory powers to pressure-test AI companion products. In October 2025, the Commissioner announced legal notices issued to four providers of AI companion chatbot services, requiring information about steps taken to protect Australian users, particularly children, from harmful content. The announcement also set out potential civil penalties for non-compliance.

How This Intersects with Mental Health Risks

From a mental health and youth safety perspective, the policy focus is on reducing pathways through which vulnerable users can be exposed to, or nudged toward, harmful material through persuasive conversation, faux emotional intimacy, or high-frequency engagement patterns.

The code text’s emphasis on “Australian children” and on restricted categories including self-harm-related material is consistent with that direction of travel. At the same time, the 9News report uses broader language (including terms like suicidal ideation and disordered eating) to describe the harms publicly, and those terms can differ from the exact labels used inside the code schedules.

Similar Safety Moves Elsewhere

Australia is not alone in putting conversational AI and companion-style systems under sharper scrutiny.

In California, analysis of SB 243 describes a framework aimed at “companion chatbots”, including disclosure and safety protocol expectations, with specific attention to suicide and self-harm-related interactions.

In the UK, Ofcom guidance under the Online Safety Act emphasises preventing children from encountering harmful categories including suicide and self-harm content, using tools such as highly effective age assurance where required.

In the EU context, Italy’s data protection authority has taken enforcement action linked to age assurance and risks to minors involving an AI chatbot product, including a significant fine reported by Reuters.
