Shadow AI and AI Agents: The New Identity and Privacy Risk

Image Credit: Jacky Lee

A Partner Content feature published by iTnews on 14 January 2026 argues that identity is shifting from a back-end IT function to a board-level security and governance issue, largely because AI agents and other non-human identities are multiplying inside organisations.

The article, written by Jennifer O’Brien, is based on comments from Stephanie Barnett, Vice President of Presales and Interim GM for Asia Pacific and Japan at Okta, who appeared on the Identity Edge iTnews podcast.

While the iTnews piece is sponsored content, the core risk it describes is clear and widely recognised: once AI-powered workflows expand, the number of identities that can access systems grows quickly, and privacy exposure rises if those identities are not governed.

What the iTnews Feature Reported

The iTnews feature highlights results from Okta polling at regional events, positioning them as a snapshot of current enterprise readiness in Australia and the wider region. It reported that 41 percent of organisations said no single person owns AI security risk, 18 percent said they can detect when an AI agent behaves unexpectedly, and 10 percent said they know how to secure non-human identities. It also described shadow AI as the biggest blind spot for Australian organisations.

The feature also reports a board-level awareness gap. It quotes Barnett as saying that 70 percent of boards are aware of the risk but 28 percent are fully engaged, and it frames that gap as an accountability problem rather than a lack of awareness.

Because these figures come from event polling rather than an independently published survey methodology, they are best treated as an indicator of what security leaders are reporting in practice, not a definitive national benchmark.

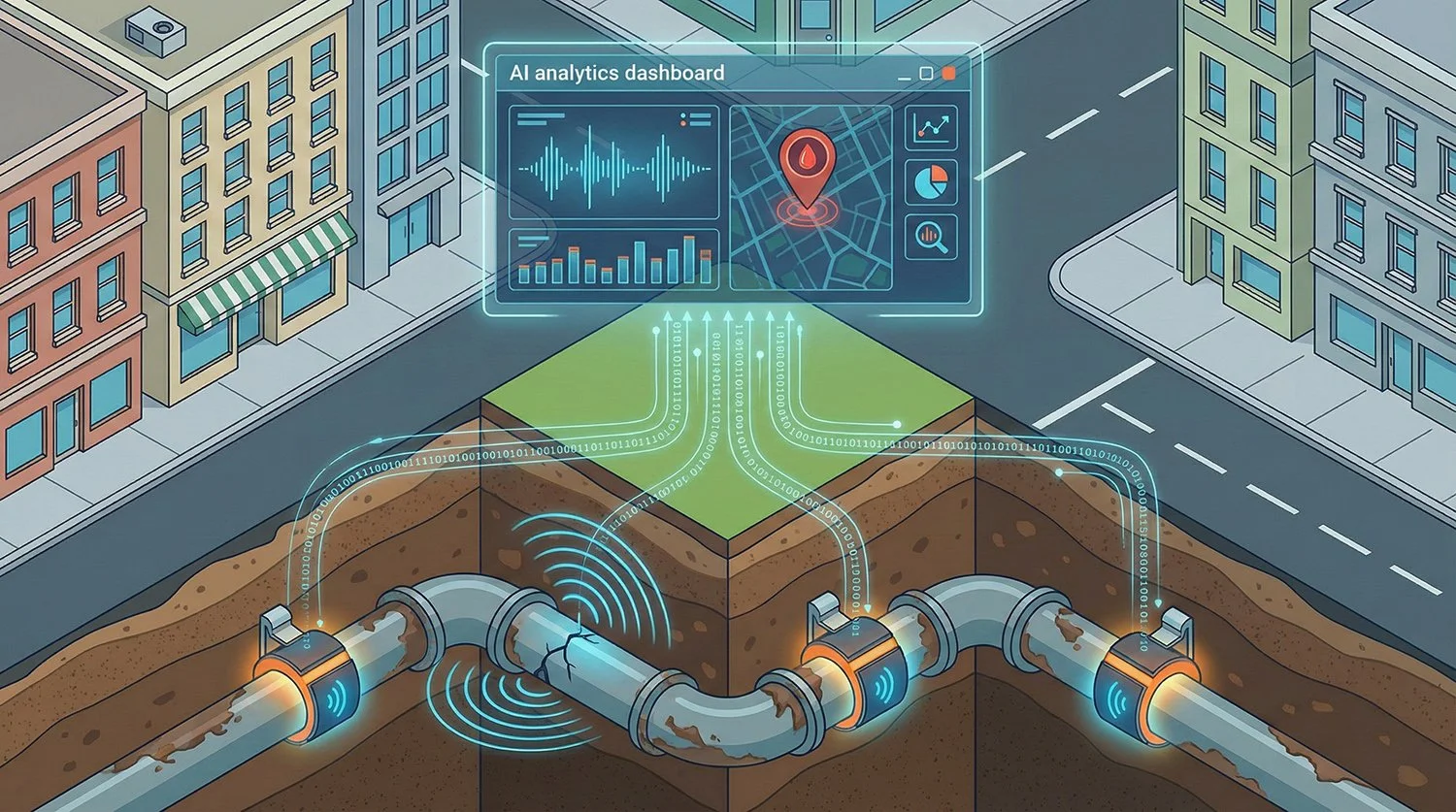

Why AI Agents Change the Identity Equation

The practical change is not just more logins. It is more software acting with authority.

Traditional identity programs mainly dealt with employees, contractors, and customer accounts. AI adoption adds a broader mix, including automation accounts, API integrations, service identities and AI agents that may operate continuously. The iTnews feature describes a surge of AI agents acting autonomously in enterprise environments, alongside expanding APIs and accelerated cloud adoption.

This matters for privacy because identity is a gatekeeper for data access. If an AI agent has permissions to search inboxes, read documents, query CRMs, or pull customer records, then mistakes in access design can turn into unintended data exposure. The privacy issue is often not the model itself, but the combination of broad permissions and limited visibility into what an automated identity is doing.
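
The point about broad permissions and limited visibility can be illustrated with a minimal sketch. It assumes a hypothetical in-house record for an agent identity; the class name, field names, and scope strings below are invented for illustration and do not correspond to any vendor's API. The idea is simply that an agent gets an explicit allow-list of scopes, a named owner, and a log of every access decision.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class AgentIdentity:
    """Hypothetical record for a non-human identity (not a vendor schema)."""

    name: str
    owner: str                       # accountable person or team
    allowed_scopes: frozenset[str]   # explicit allow-list; nothing is implied
    audit_log: list[tuple[str, bool]] = field(default_factory=list)

    def authorize(self, scope: str) -> bool:
        """Permit only listed scopes and record every decision for review."""
        allowed = scope in self.allowed_scopes
        self.audit_log.append((scope, allowed))
        return allowed


# Example: an agent that may read support tickets and nothing else.
agent = AgentIdentity(
    name="support-summariser",
    owner="customer-support-team",
    allowed_scopes=frozenset({"tickets:read"}),
)

print(agent.authorize("tickets:read"))  # True
print(agent.authorize("crm:export"))    # False
print(agent.audit_log)
```

In this sketch a denied request is still logged, so a reviewer can see when an agent attempted something outside its remit rather than only what it was allowed to do.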

Shadow AI: the Privacy Risk is Unmanaged Data Flows

Shadow AI usually shows up as staff adopting tools, extensions, or integrations outside formal review. In practice, that can create new places where personal information is copied, processed, or stored without clear controls.

Australian government guidance is blunt on this point. The Digital Transformation Agency staff guidance on public generative AI tools says not to put personal information into public generative AI tools and to assume anything entered could be made public.

The OAIC has taken a similar position. In a blog post published 4 December 2025, the OAIC states that in its October 2024 guidance it advised regulated entities to refrain from entering personal information, particularly sensitive information, into publicly available tools, noting that once personal information is input it can be difficult to track or control and potentially impossible to remove, depending on settings.

Together, these statements put a spotlight on a common privacy failure mode: teams experiment with AI tools to save time, but the organisation cannot clearly answer which tools are being used, what data is being shared, and which external parties can retain it.

Australia’s Cyber Guidance Now Links AI Directly to Privacy Breaches

On the same day as the iTnews feature, the ACSC published guidance for small business on managing cyber security risks from AI, listing “data leaks and privacy breaches” as a key risk category.

The ACSC guidance gives a concrete example: uploading customer or staff details into generative AI platforms without proper anonymisation can expose sensitive or private information.
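
As a rough illustration of that example, the sketch below masks obvious identifiers before text is pasted into an external tool. It is not drawn from the ACSC guidance: the patterns, placeholder labels, and function name are assumptions, and pattern matching of this kind only catches well-formed email addresses and Australian-style phone numbers. Names, addresses, and free-text details would pass straight through, so it should not be mistaken for proper anonymisation.

```python
import re

# Illustrative patterns only; real data will contain formats these miss.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
# Australian-style numbers such as 0412 345 678 or +61 412 345 678.
PHONE = re.compile(r"(?<!\d)(?:\+?61[ -]?|0)[2-478](?:[ -]?\d){8}(?!\d)")


def redact(text: str) -> str:
    """Replace matched emails and phone numbers with fixed placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)


sample = "Contact jane.doe@example.com or 0412 345 678 about the refund."
print(redact(sample))
# Contact [EMAIL] or [PHONE] about the refund.
```

A filter like this is best seen as a last line of defence behind policy and training, since it cannot judge whether the remaining text still identifies a person.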

This is relevant to larger organisations too, because it captures a simple reality: AI tools often need access to data to be useful. If identity controls and internal policies do not keep pace, privacy and security issues become more likely, even without a sophisticated attacker.

Identity Controls and the Essential Eight Connection

The iTnews feature explicitly links identity controls to Australia’s Essential Eight maturity expectations, calling out phishing-resistant multi-factor authentication and privilege reduction as central to resilience.

The Essential Eight maturity model itself includes requirements for phishing-resistant multi-factor authentication in multiple contexts, including online services, systems, and data repositories.

For AI-related privacy risk, the connection is straightforward. If staff credentials are stolen, or if privileged access is poorly controlled, unauthorised access to systems that contain personal information becomes easier. Strong authentication and controlled privilege reduce the chance that a single compromised identity becomes a broader data exposure event.

How the Industry is Responding: Agent Identity Products and Secure by Design Standards

The wider market has started building for this agent reality, and not just in vendor messaging.

Major platforms are formalising “agent identities”

Microsoft’s documentation describes agent identities in Microsoft Entra as accounts used by AI agents. It notes that agent identities do not have passwords and instead use other credential types such as managed identities, federated credentials, certificates, or client secrets.

This is one example of the direction enterprise identity is heading: treating agents as first-class identities with lifecycle management, accountability, and auditability.

Standards groups are trying to reduce complexity and optionality

The OpenID Foundation’s IPSIE working group is developing secure-by-design profiles of existing specifications, with a stated goal of achieving interoperability and security by design by minimising optionality in the specifications used in enterprise implementations.

This matters because organisations often run multiple identity systems and integrations. Optionality and inconsistent implementations can create gaps, which become harder to detect once automated agents are part of everyday workflows.

Why Credential Based Access Still Matters in 2026

Even as the discussion shifts to AI agents, basic access risks remain a major driver of incidents. Verizon’s 2025 DBIR materials state that about 88 percent of breaches within the “Basic Web Application Attacks” pattern involved the use of stolen credentials.

This is a useful grounding point for the AI story: agents, service identities, and APIs add new access paths, but the security baseline still depends on strong authentication, least privilege, and monitoring across all identities.

What to Watch Next

Three practical trends are emerging from the Australia-focused discussion and the broader standards and platform moves:

Governance ownership becomes a first question. The iTnews feature highlights that many organisations still do not have a single owner for AI security risk. Expect more firms to assign clear accountability as AI use expands.

Identity teams will be pulled into AI decisions earlier. AI projects that touch sensitive systems increasingly require identity design, access scoping, and audit planning upfront, rather than bolting controls on later.

Rules on what staff can enter into AI tools will become more formal. Australian guidance is already clear on limiting personal information in public generative AI tools. Organisations that want to use AI safely will likely strengthen internal policies, training, and monitoring aligned to that stance.
