University of Melbourne Launches CAIR to Build GenAI Readiness into Professional Degrees

Image Credit: Eriksson Luo | Splash

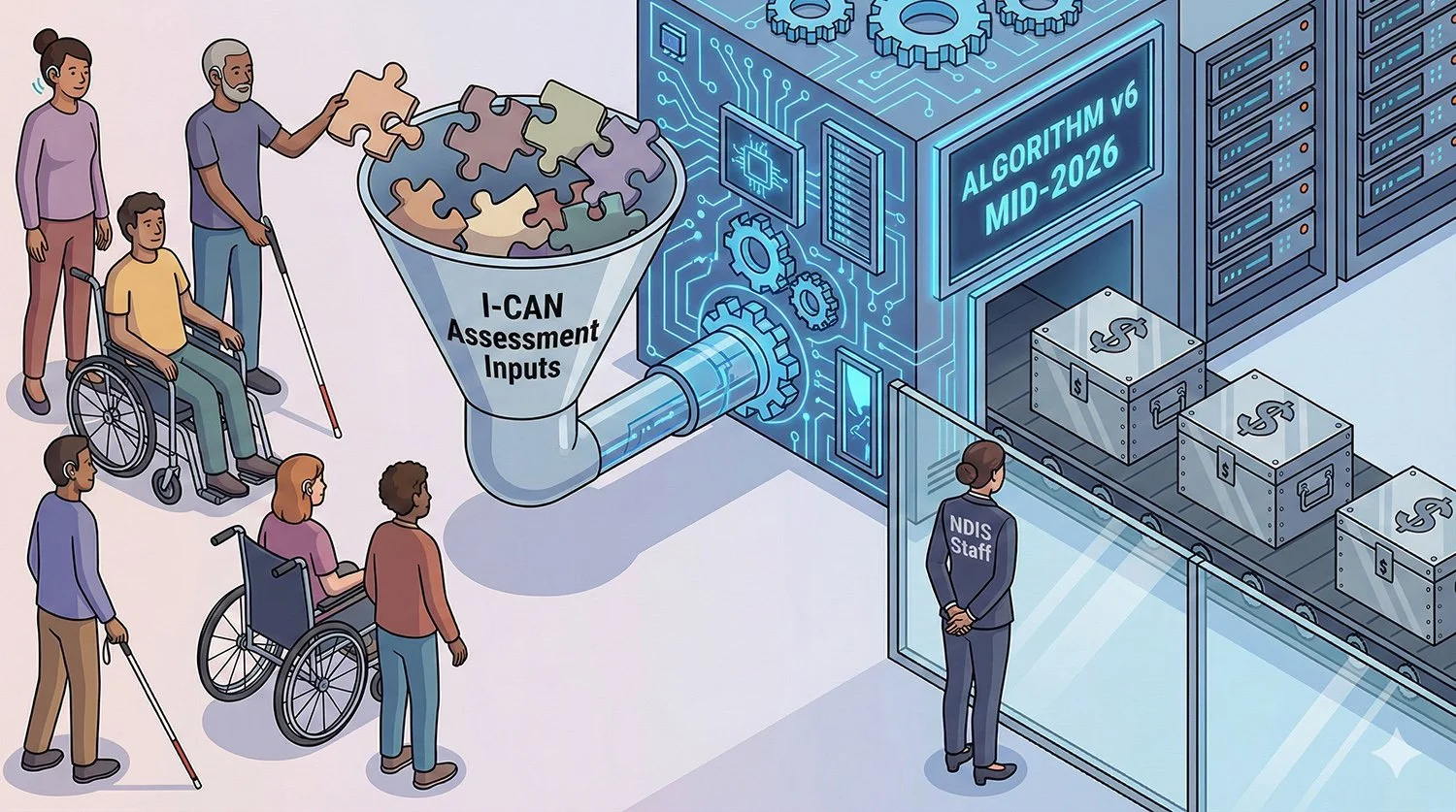

The University of Melbourne has announced a new education-focused initiative called CAIR, short for Cross disciplinary AI Readiness for Health, Human Services and Teacher Education. The project is led by Dr Jessica Boyce (Speech Pathology) and is described as a cross-faculty collaboration between the Faculty of Medicine, Dentistry and Health Sciences and the Faculty of Education, aimed at incorporating AI use into professional training across multiple disciplines.

What CAIR Is, and Who Is Involved

According to the University of Melbourne’s Melbourne School of Health Sciences announcement dated 17 December 2025, CAIR brings together academics across a range of people-facing professions. The published team list spans areas including speech pathology, physiotherapy, dentistry, education, audiology, social work, medicine, optometry, psychological sciences, and nursing.

The page states the work is funded by a University Teaching and Learning Initiative grant, and that the project will focus on the ethical and strategic application of generative AI in healthcare, social care, and education settings.

What CAIR Plans to Deliver

The CAIR announcement describes a plan to create a cross-faculty digital learning resource, developed with support from Learning and Teaching Innovation. The stated intention is to roll this resource out across more than 10 programs in early 2027.

In practical terms, that positions CAIR as curriculum infrastructure rather than a single elective or short course. It is framed as something that can be reused across multiple degrees, which matters if the goal is consistent AI capability across professions that have different regulatory environments, privacy obligations, and client safety requirements.

Why the Timing Makes Sense

The University’s rationale is anchored in the idea that generative AI is reshaping healthcare, social care, and education, and that professional courses need AI literacy to match. The CAIR page explicitly links its aims to building student capability to use GenAI applications appropriately, critically evaluate tools in professional contexts, and understand ethical use and potential challenges.

This lines up with a wider Australian shift from ad hoc guidance toward more structured responses. For example, the tertiary regulator TEQSA says it issued a request for information in June 2024 asking all Australian higher education providers for a credible institutional action plan addressing risks to award integrity from generative AI. It later published a toolkit organised around Process, People and Practice to support ethical integration while managing assessment integrity risks.

The Broader “AI Readiness Gap” Conversation in Australia

CAIR is one example of a bigger pattern: treating AI capability as a core employability skill rather than a niche technical topic.

Jobs and Skills Australia has separately framed generative AI as a labour market and skills transition issue. Its “Our Gen AI Transition” publication positions GenAI as already starting to transform work and skills, and its overview notes that Australia’s approach should be broad and flexible enough to prepare for agentic AI and future developments, not only today’s tools.

On the school pipeline side, the Australian Government’s Department of Education hosts the Australian Framework for Generative AI in Schools, noting Education Ministers endorsed a 2024 review in June 2025 and describing the framework as guidance for responsible and ethical use that benefits students, schools, and society.

Taken together, CAIR sits in the middle of a growing end-to-end effort: schools guidance, university-level integrity and curriculum reforms, and workforce-oriented AI training initiatives.

Comparison with Other Recent Education and Training Moves

CAIR is not happening in isolation. A few comparable Australia-linked efforts illustrate different approaches to the same problem:

University-wide AI literacy modules (Monash): Monash describes a “Foundations in Artificial Intelligence” module that includes hands-on activities with generative AI tools and covers practical topics like prompting, evaluating outputs, and acknowledging AI use in academic work. This is closer to a baseline literacy layer that can support many disciplines, whereas CAIR is designed as a resource embedded in professional degrees and spanning specific fields.

Secure enterprise GenAI access plus training (UNSW): UNSW has announced an enterprise agreement with OpenAI to provide 10,000 staff access to ChatGPT Edu, describing it as a secure platform with privacy protections around prompts and data use, and noting a staged rollout supported by training and guidelines. That model focuses on giving staff tools and guardrails at institutional scale, while CAIR focuses on student professional readiness through curriculum design.

Health workforce AI literacy frameworks (University of Queensland): The University of Queensland’s Queensland Digital Health Centre has described an international collaboration to develop and publish an AI literacy framework for health, with planned work including assessing current training, identifying learning needs, and co-designing solutions with stakeholders. This is closer to framework and evidence building, while CAIR is positioned as a teaching resource rollout across multiple degree programs.

Industry-led AI skills initiatives (OpenAI, CommBank and others): OpenAI’s “OpenAI for Australia” announcement describes a skills initiative with CommBank, Coles and Wesfarmers intended to roll out essential AI skills training to more than 1.2 million Australian workers and small businesses, using OpenAI Academy, with a nationwide rollout beginning in 2026. CommBank has also published details of a program aimed at its small business customers, including AI learning resources and masterclasses co-developed with OpenAI alongside broader digital and cyber capability content.

This matters for CAIR because employers and professional bodies are increasingly likely to expect graduates to understand not only how to use AI tools, but also how to justify use, document limits, manage privacy, and maintain accountability.
