Australia Implements AI in Government Policy 2.0: New Accountability Standards

Image Credit: Jacky Lee

Australia’s Policy for the responsible use of AI in government Version 2.0 is now in force for much of the Commonwealth public sector. The Digital Transformation Agency (DTA) published the updated policy on 1 December 2025, and the implementation guidance says Version 2.0 became effective on 15 December 2025, replacing Version 1.1 (effective 1 September 2024).

While this is not legislation, it operates as a whole-of-government policy with mandatory requirements for covered entities, shaping how agencies approve, document, procure, and oversee AI use cases in day-to-day operations.

Who Must Follow It?

The implementation page states the policy is mandatory for non-corporate Commonwealth entities under the Public Governance, Performance and Accountability Act 2013, and corporate Commonwealth entities are encouraged to apply it.

It also sets out national security carve-outs, stating the policy does not apply to AI use in the defence portfolio and the national intelligence community as defined under the Office of National Intelligence Act 2018.

What the Policy Is Trying to Change

The policy frames government as having an “elevated responsibility” when using AI, and it aims to strengthen public trust through more consistent requirements for transparency, accountability, and risk-based oversight of AI use cases.

In practice, the update focuses less on broad principles and more on controls that can be checked: who is accountable, which AI use cases exist, how risks are assessed, and what gets disclosed to the public.

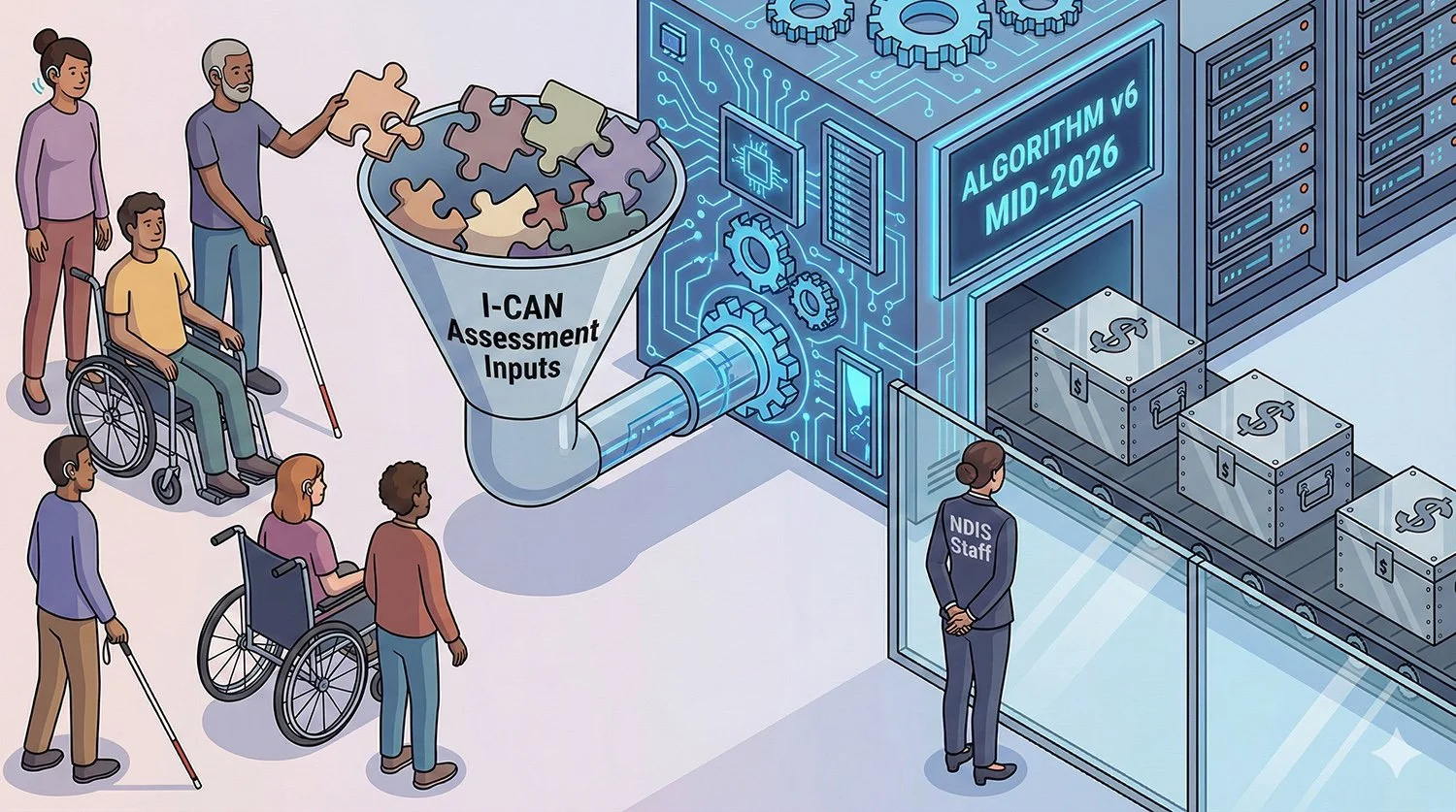

Mandatory AI Impact Assessment for “In Scope” Use Cases

A centrepiece of Version 2.0 is a mandatory AI impact assessment requirement for AI use cases that meet the policy’s in-scope criteria. The introduction to the DTA’s impact assessment tool explicitly ties the policy update to this new requirement.

The policy also sets out ongoing governance expectations for higher-risk deployments. For example, where an agency rates an in-scope use case as inherently high risk, it must escalate internally, govern the use case through a designated board or senior executive (as appropriate), and ensure it is reviewed at least every 12 months, alongside reporting through accountable channels.
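The escalation logic described above could be sketched roughly as follows. This is a minimal illustration, not an official DTA schema: the function names, the risk label "high", and the control labels are all hypothetical, and the 12-month review check is one reasonable reading of "at least every 12 months".

```python
from datetime import date


def required_controls(in_scope: bool, inherent_risk: str) -> list[str]:
    """Map a use case to the governance steps described in the article.

    All labels are illustrative placeholders, not terms defined by the policy.
    """
    if not in_scope:
        return []
    controls = ["impact_assessment"]
    if inherent_risk == "high":
        controls += [
            "internal_escalation",
            "board_or_senior_executive_governance",
            "review_every_12_months",
        ]
    return controls


def review_overdue(last_review: date, today: date) -> bool:
    """Return True once more than 12 months have passed since the last review."""
    try:
        due = last_review.replace(year=last_review.year + 1)
    except ValueError:
        # last_review was 29 February and the following year is not a leap year
        due = last_review.replace(year=last_review.year + 1, day=28)
    return today > due
```

An agency tool might call `required_controls(True, "high")` when a use case is first rated, then run `review_overdue` on a schedule to flag high-risk use cases that have missed their annual review.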

Accountability Requirements: Named Owners and an Internal Use Case Register

Version 2.0 formalises accountability roles and record keeping. The Standard for accountability requires agencies to establish an internal register of AI use cases that fall within scope, including minimum fields and designated responsibility for each use case.

Separate policy guidance states agencies must create an internal AI use case register within 12 months of the policy taking effect, and must share the register with the DTA every 6 months once created.
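For teams that track these obligations in internal tooling, the timelines above can be sketched in a few lines of Python. This is an illustrative sketch only. The `UseCaseRecord` fields are hypothetical, since the policy's actual minimum fields are not listed here. It also assumes the 12-month window runs from the 15 December 2025 effective date, and that the 6-month sharing cycle is counted from the date the register is created.

```python
from dataclasses import dataclass
from datetime import date

POLICY_EFFECTIVE = date(2025, 12, 15)  # effective date as reported in this article


def add_months(start: date, months: int) -> date:
    """Return the same day-of-month `months` later.

    Assumes the day exists in the target month (true for the dates used here).
    """
    total = start.month - 1 + months
    return start.replace(year=start.year + total // 12, month=total % 12 + 1)


@dataclass
class UseCaseRecord:
    # Hypothetical fields for illustration; not the policy's official minimum fields.
    name: str
    accountable_owner: str
    risk_rating: str


def register_deadline() -> date:
    """Latest date to create the register, assuming 12 months from the effective date."""
    return add_months(POLICY_EFFECTIVE, 12)


def next_share_dates(register_created: date, count: int = 3) -> list[date]:
    """Upcoming six-monthly dates for sharing the register with the DTA (assumed cadence)."""
    return [add_months(register_created, 6 * i) for i in range(1, count + 1)]
```

Under these assumptions, `register_deadline()` evaluates to 15 December 2026, and a register created on 1 March 2026 would first be shared by 1 September 2026.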

For technology teams and vendors working with government, this is a practical shift: AI projects should increasingly come with a clearer owner, a documented risk position, and a traceable lifecycle record.

Transparency Statements: What the Public May See More Of

The policy includes a transparency approach intended to help the public understand government AI use at a high level, in line with its stated aim of building trust through consistent transparency and accountability requirements.

In effect, agencies should be moving toward clearer, more standardised public explanations of how AI is used in services, rather than leaving this information scattered across individual projects.

Operational Readiness: Staff Training Becomes a Required Control

The policy also includes workforce readiness measures. One example is a requirement that agencies implement mandatory training for all staff on responsible AI use within 12 months of the policy taking effect, supported by DTA guidance and training resources.

This reflects a common real-world issue with AI deployment: risk does not only sit in the model. It also sits in how staff prompt, interpret, override, or rely on AI outputs.

Procurement Gets Pulled Into the Policy Framework

Alongside the policy refresh, the DTA announced new supporting resources, including a new AI impact assessment tool and procurement guidance aimed at helping agencies buy AI products and services in line with government expectations.

From an IT governance perspective, this matters because procurement is often where AI risk is locked in early, through contract terms, documentation expectations, audit rights, data handling, and supplier obligations.

How Australia’s Approach Compares with Other Government Frameworks

Australia’s Version 2.0 is best read as an administrative governance framework: it sets internal requirements for covered agencies rather than creating economy-wide legal duties.

European Union: The EU is implementing binding rules through the EU AI Act. The European Commission recently published a first draft Code of Practice on marking and labelling AI-generated content, noting that the related transparency rules are expected to become applicable on 2 August 2026.

United States: The US pursued executive branch governance through Executive Order 14110 (Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence), published via the Federal Register in 2023, which set direction for federal agencies until it was rescinded in January 2025.

United Kingdom: The UK has been building practical assurance methods for public sector teams, including work described by the Central Digital and Data Office on testing and assuring AI in government settings.

The shared trend is familiar: governments are trying to keep AI adoption moving while putting stronger checks around high impact use cases.

Source: DTA, Digital, DTA AI Policy, EU Official Website, Federal Register, Gov UK
